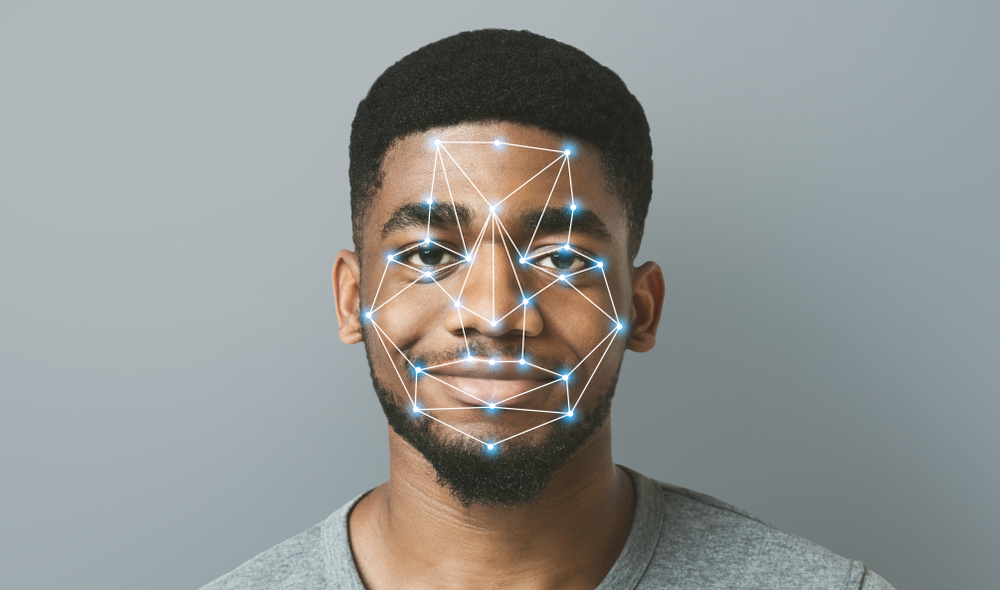

It has been revealed that software giant Google has been specifically seeking out members of the public with ‘darker skin’ to improve its software. Facial recognition is being pushed as a headline feature of the upcoming Pixel 4 handset, and this is reportedly why Google targeted people with darker skin tones.

Apparently, the company hired temporary workers, or ‘temps’, through the recruitment firm Randstad, then had them scan people’s faces. Those who agreed to take part were given $5 gift cards in exchange.

However, the temps were reportedly asked to specifically target students and homeless people who matched the desired profile. They were also allegedly encouraged not to disclose the true purpose of the research; instead, they were told to ask people whether they would be interested in playing a ‘selfie game’ using the camera.

‘We were told not to tell them that it was video, even though it would say on the screen that a video was taken (…)’

A Google temp, speaking to the NY Daily News

Participants were given a specially designed prototype handset that was used to improve the accuracy of Google’s facial recognition software. This software will form part of the Google Pixel 4’s security measures and will likely also be used to group photos in which the same person appears, a feature already available on the Pixel 3.

According to Google, the aim of the project was to ensure that the phone’s security measures “protect as wide a range of people as possible”.

‘It’s critical we have a diverse sample, which is an important part of building an inclusive product (…)’

A Google spokesperson

Some other media outlets that picked up this story have also claimed that a former member of the project said they were asked to target homeless people and students “because they were less likely to speak to the media”. Unfortunately for Google, it seems that it was the temps themselves who leaked this information.

Just earlier this month, a prominent member of the US Congress lashed out at one law enforcement agency’s facial recognition software, which was said to have a 60% error rate for people with darker skin. Clearly, Google has to develop its software and needs the data to do so; however, the ethics of how it went about collecting that data could be called into question.

What do you think? Should Google have been completely open and honest about its reasons for conducting the study? Let us know your thoughts in the comments section below.
